Okay, I might as well start drafting out documentation for the PVR file format. 'PVR' stands for PowerVR, and we can use Wikipedia for an explanation of what PowerVR is.

PowerVR is a division of Imagination Technologies (formerly VideoLogic) that develops hardware and software for 2D and 3D rendering, and for video encoding, decoding, associated image processing and DirectX, OpenGL ES, OpenVG, and OpenCL acceleration. PowerVR also develops AI accelerators called Neural Network Accelerator (NNA).

Imagination Technologies is a company in the UK. And it looks like the Japanese company NEC licensed the technology from PowerVR to create the GPU / texture format for the Dreamcast. So basically .pvr files are the native texture format for the Dreamcast.

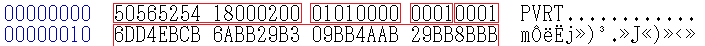

The .pvr image file has a small, simple header, but the format gets complicated pretty fast. Like most other formats we have the IFF-style tag of PVRT followed by the length of the content in the file. So the only real header is the 8 bytes after the IFF tag and length, which makes 16 bytes in total. And the format is

[1 byte: color_format]

[1 byte: data_format]

[2 byte: no operation]

[2 byte: image width]

[2 byte: image height]
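Reading those fields can be sketched in Python roughly like this. It is only a sketch: it assumes the values are little-endian and that the length is a 32-bit field, and the names `parse_pvr_header` and `offset` are made up for illustration.

```python
import struct

def parse_pvr_header(data, offset=0):
    """Parse the PVRT tag, the length, and the 8 header bytes that follow.

    Assumptions (not stated in the text above): all values are little-endian,
    the length field is 32 bits, and `offset` points at the 'PVRT' tag.
    """
    magic, length = struct.unpack_from("<4sI", data, offset)
    if magic != b"PVRT":
        raise ValueError("PVRT tag not found at the given offset")
    # 1 byte color format, 1 byte data format, 2 unused bytes, width, height
    color_format, data_format, _unused, width, height = struct.unpack_from(
        "<BBHHH", data, offset + 8
    )
    return {
        "length": length,
        "color_format": color_format,
        "data_format": data_format,
        "width": width,
        "height": height,
    }
```

The image data then starts 16 bytes after the start of the PVRT tag.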

So in this case we have color format 1, data format 1, width 0x100 (256) height 0x100 (256). So basically it comes down to color_format / data_format. The color format is pretty simple. All colors are 16 bits (2 bytes) with the following enum possibilities:

ARGB_1555 = 0x00

RGB_565 = 0x01

ARGB_4444 = 0x02

YUV_422 = 0x03

BUMP = 0x04

RGB_555 = 0x05

ARGB_8888 = 0x06

YUV_420 = 0x06

While there are seven values listed (0x06 shows up under two names), I have only seen 0, 1, or 2 used in the entirety of PSO and any other Dreamcast game I've looked at. Basically what this creates is a trade-off. You have ARGB_1555, which uses 1 bit for transparency and 5 bits each for red, green and blue. As far as I can tell the alpha bit works as an on/off switch: when it is 0 the pixel is fully transparent, and when it is 1 the pixel is fully opaque. Then you have RGB_565, which has no transparency and is the most commonly used format in PSO. The artists use a lot of black and then liberal alpha blending to create most of the transparent effects in PSO. And last you have ARGB_4444, which has 4 bits for each channel. This is not used very often because of the limited color range.

So that's about it for the color. Basically the overall approach for parsing textures is that you create a 2D array with the width and height of the texture, and then decode the data so that each element in the array holds a 2-byte color value. Once the image data has been decoded, you do one pass over every element in the 2D array to convert it from 2 bytes (ARGB_1555, RGB_565, ARGB_4444) to RGBA8888.
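That final conversion pass could look something like the sketch below. The function name `to_rgba8888` is hypothetical, and the way each channel is widened to 8 bits (repeating the high bits into the low bits) is just one common choice, not something the format dictates.

```python
def to_rgba8888(value, color_format):
    """Convert one 16-bit color value to an (r, g, b, a) tuple of 8-bit channels.

    `value` is assumed to already be an integer (the two bytes read as
    little-endian). Only the three formats discussed above are handled.
    """
    if color_format == 0x00:  # ARGB_1555
        a = 255 if value & 0x8000 else 0
        r = (value >> 10) & 0x1F
        g = (value >> 5) & 0x1F
        b = value & 0x1F
        return ((r << 3) | (r >> 2), (g << 3) | (g >> 2), (b << 3) | (b >> 2), a)
    if color_format == 0x01:  # RGB_565
        r = (value >> 11) & 0x1F
        g = (value >> 5) & 0x3F
        b = value & 0x1F
        return ((r << 3) | (r >> 2), (g << 2) | (g >> 4), (b << 3) | (b >> 2), 255)
    if color_format == 0x02:  # ARGB_4444
        a = (value >> 12) & 0x0F
        r = (value >> 8) & 0x0F
        g = (value >> 4) & 0x0F
        b = value & 0x0F
        # Multiplying by 17 maps 0..15 onto 0..255 exactly.
        return (r * 17, g * 17, b * 17, a * 17)
    raise ValueError("unsupported color format: %d" % color_format)
```

For example, `to_rgba8888(0xF800, 0x01)` gives pure red with full alpha.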

Now we can look at the data formats.

# Data Formats

TWIDDLED = 0x01

TWIDDLED_MM = 0x02

VQ = 0x03

VQ_MM = 0x04

PALETTIZE4 = 0x05

PALETTIZE4_MM = 0x06

PALETTIZE8 = 0x07

PALETTIZE8_MM = 0x08

RECTANGLE = 0x09

STRIDE = 0x0B

TWIDDLED_RECTANGLE = 0x0D

ABGR = 0x0E

ABGR_MM = 0x0F

SMALLVQ = 0x10

SMALLVQ_MM = 0x11

TWIDDLED_MM_ALIAS = 0x12

And hole-lee-shit this is where the .pvr format really starts sucking, because the amount of variation gets pretty crazy. Before we get into that: I have not seen STRIDE, ABGR or ABGR_MM in practical use, so I will not be describing how to decode them here. I can't remember whether I've actually seen RECTANGLE used, but it basically means that the data is already in order in a 2D array and all you have to do is read the values. The main formats used in .pvr seem to be Twiddled and VQ. Palette is used rarely, so I'll try to touch on what I know about that as well.

# Mipmaps

Everyone might already know what mipmaps are, but I'm kind of an idiot, so I didn't know when I started to get into this stuff.

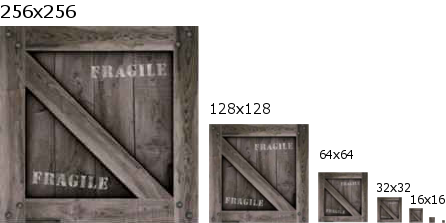

Mipmaps are multiple copies of the same image, at different sizes, stored in the same file. The idea is that if an object is farther away in the viewport, it doesn't make sense to waste cycles drawing the full image when the object only takes up a few pixels on screen. PVR stores mipmaps in order from smallest to largest. So to read the full-size image you'll have to seek past the smaller images. The size of each image in bytes depends on whether the image is Twiddled or VQ.
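As a rough sketch of how far to seek, the function below adds up the sizes of every level smaller than the full image. The name `mipmap_skip_bytes` is made up, and it assumes 2 bytes per pixel for Twiddled, one index byte per 2x2 block for VQ, and one byte for the 1x1 VQ level. Real files may carry extra padding before or between levels that this does not model, so check the result against a known file before trusting it.

```python
def mipmap_skip_bytes(width, is_vq):
    """Sum the byte sizes of all mipmap levels smaller than `width`.

    Assumptions: square power-of-two levels starting at 1x1, no padding.
    """
    total = 0
    size = 1
    while size < width:
        if is_vq:
            # One codebook index per 2x2 block; the 1x1 level is assumed
            # to still occupy a single byte.
            total += max(1, (size // 2) ** 2)
        else:
            # Plain twiddled data: one 2-byte color per pixel.
            total += size * size * 2
        size *= 2
    return total
```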

# Twiddled

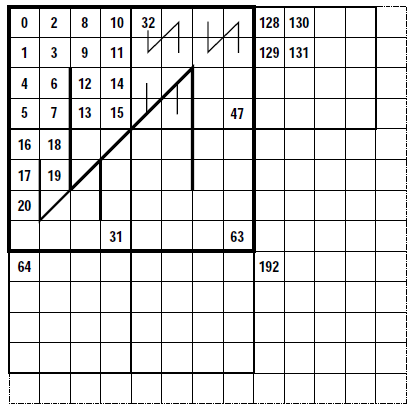

Twiddled is a format that uses a recursive function to copy data from the disk / memory into the framebuffer. The idea is that it's slightly faster than copying the image in order, and it has the positive side effect that texels likely to be used together in UV coordinates are close to each other in memory. That doesn't mean this file format doesn't suck to work with.

The "intended steps" for working with twiddled data are probably something like the following.

Code:

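```python
def readTwiddled(file, width, isVq):
    # Output buffer in row-major (in-order) layout; twiddled images are square.
    pixels = [None] * (width * width)
    # VQ bodies hold 1-byte codebook indexes, plain twiddled holds 2-byte colors.
    size = 1 if isVq else 2

    def subdivideAndMove(x, y, mipSize):
        if mipSize == 1:
            # A 1x1 block is a single element: read it in file order.
            pixels[y * width + x] = file.read(size)
        else:
            ns = mipSize // 2
            # Quadrant order: top-left, bottom-left, top-right, bottom-right.
            subdivideAndMove(x, y, ns)
            subdivideAndMove(x, y + ns, ns)
            subdivideAndMove(x + ns, y, ns)
            subdivideAndMove(x + ns, y + ns, ns)

    subdivideAndMove(0, 0, width)
    return pixels
```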

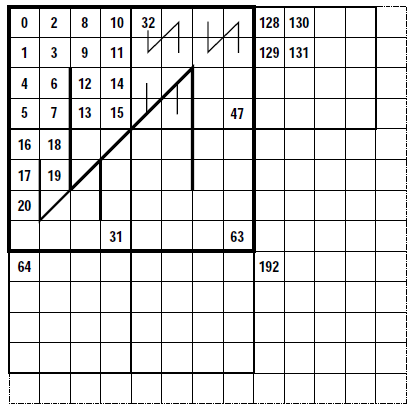

The idea being that there is some kind of performance to be gained from the subdivide-and-move recursive call. The problem is that when decoding an image from the encoded format to an in-order format, readability is important. So often the better approach is to calculate the position of each pixel from its x and y coordinates, so that you can read the pixels in order. The lookup function is as follows.

Code:

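```python
def detwiddle(x, y):
    # Spread the bits of x and y apart (bit i moves to bit 2*i).
    x_pos = 0
    y_pos = 0
    for i in range(10):  # 10 bits covers coordinates up to 1023
        shift = 1 << i
        if x & shift:
            x_pos |= shift << i
        if y & shift:
            y_pos |= shift << i
    # Interleave: y takes the even bits, x the odd bits of the twiddled index.
    return y_pos | (x_pos << 1)
```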

The idea is that rather than doing the subdivide-and-move recursive approach, you can use a much simpler in-order loop to decode the image.

Code:

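```python
# Assumes `file`, `width` and `height` are already set up, with `file`
# positioned at the start of the full-size (square) twiddled image.
start_ofs = file.tell()
pixels = [None] * (width * height)
for x in range(width):
    for y in range(height):
        pos = detwiddle(x, y)
        # Jump to the twiddled position and store the color in row-major order.
        file.seek(start_ofs + pos * 2, 0)
        pixels[y * width + x] = file.read(2)
```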

The reason position is multiplied by two is because each pixel is encoded as two bytes.

And that's about it for twiddled. If you have these functions you can convert from twiddled to in-order, and then proceed to convert the colors to RGBA once you have the in-order list.
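If you want to convince yourself that the lookup function and the recursive approach agree, a quick check like the one below does the job. It is only a sanity test: `recursive_order` is a made-up helper that records the order in which the recursion visits coordinates, and `detwiddle` is repeated in a compact form so the snippet runs on its own.

```python
def detwiddle(x, y):
    # Same mapping as the lookup function: y on even bits, x on odd bits.
    pos = 0
    for i in range(10):
        pos |= ((y >> i) & 1) << (2 * i)
        pos |= ((x >> i) & 1) << (2 * i + 1)
    return pos

def recursive_order(size):
    """Return the (x, y) coordinates in the order the recursion visits them."""
    order = []

    def visit(x, y, n):
        if n == 1:
            order.append((x, y))
        else:
            h = n // 2
            visit(x, y, h)
            visit(x, y + h, h)
            visit(x + h, y, h)
            visit(x + h, y + h, h)

    visit(0, 0, size)
    return order

# The i-th coordinate visited by the recursion should detwiddle to index i.
order = recursive_order(8)
matches = all(detwiddle(x, y) == i for i, (x, y) in enumerate(order))
```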

I'll make a quick mention of rectangle twiddled. Basically twiddled textures can only ever be square, because that's the only way the subdivide-and-move function works. To make rectangular textures, the format stacks several square textures next to each other. So the height will be a multiple of the width for tall textures, and the width will be a multiple of the height for wide textures.

To decode twiddled images you split them into three main groups: tall, wide or square. In the case of square you check to see if there are mipmaps, and seek past them if they exist. Then you read the pixels to create a 2D array of 2-byte color values, and return that array to be converted into RGBA8888 values and saved as a PNG or something.
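The tall / wide case can be sketched as a loop over the stacked squares. This is a guess at the layout based on the description above: it assumes the squares are stored one after another, left to right for wide textures and top to bottom for tall ones, with 2-byte little-endian colors and no mipmaps. The name `read_twiddled_rect` is hypothetical.

```python
def detwiddle(x, y):
    # y on even bits, x on odd bits of the twiddled index.
    pos = 0
    for i in range(10):
        pos |= ((y >> i) & 1) << (2 * i)
        pos |= ((x >> i) & 1) << (2 * i + 1)
    return pos

def read_twiddled_rect(data, width, height):
    """Decode a tall, wide or square twiddled image from a bytes object."""
    size = min(width, height)            # edge length of each square
    block_bytes = size * size * 2        # 2 bytes per pixel
    blocks = max(width, height) // size  # number of stacked squares
    pixels = [0] * (width * height)
    for b in range(blocks):
        # Assumed layout: squares run along the longer axis.
        ox = b * size if width > height else 0
        oy = b * size if height > width else 0
        base = b * block_bytes
        for y in range(size):
            for x in range(size):
                ofs = base + detwiddle(x, y) * 2
                color = int.from_bytes(data[ofs:ofs + 2], "little")
                pixels[(oy + y) * width + ox + x] = color
    return pixels
```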

# VQ (Vector quantization)

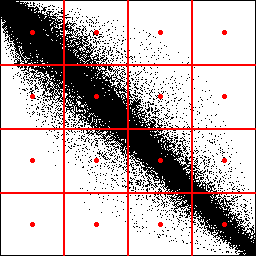

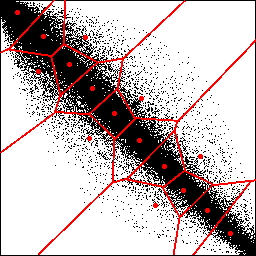

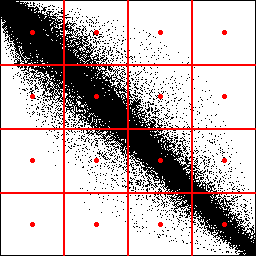

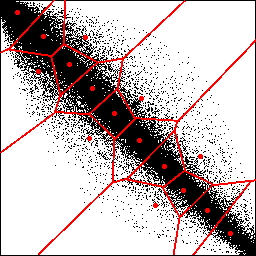

To quickly start off with what vector quantization means, we can basically look at the following.

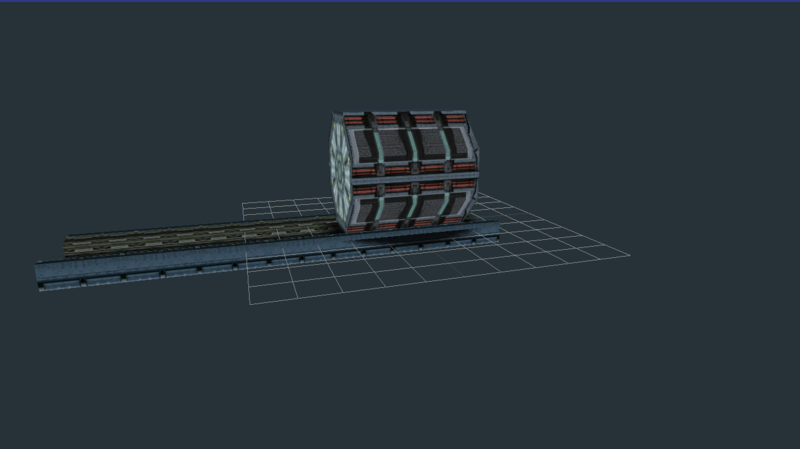

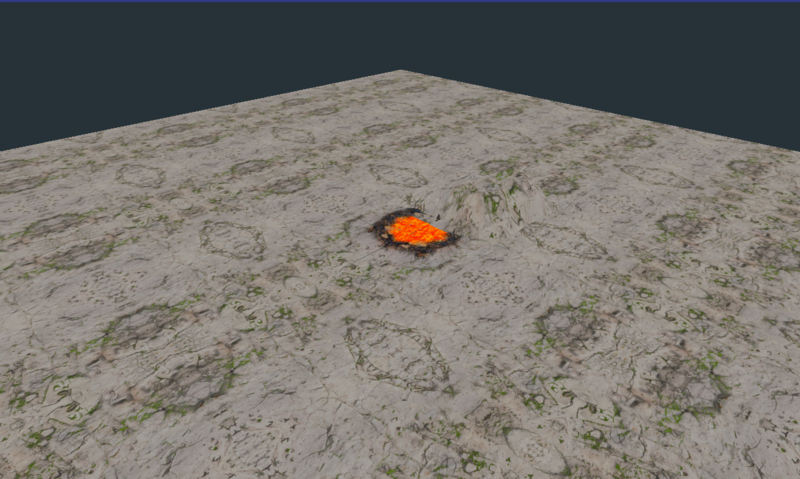

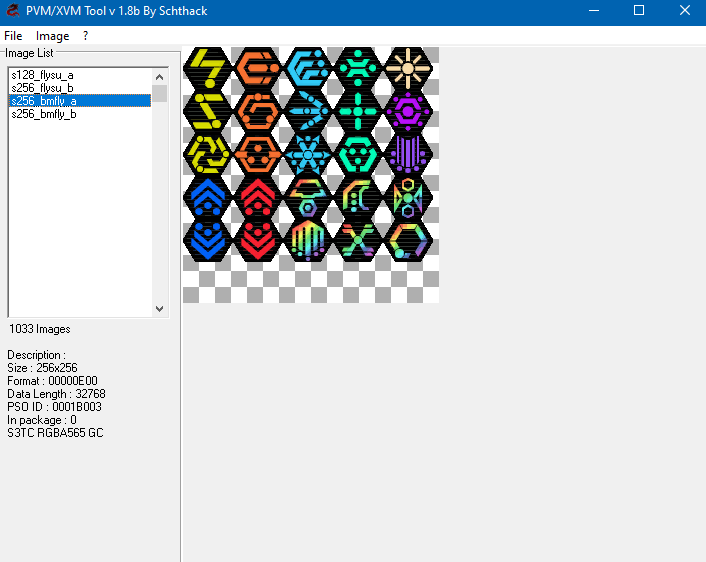

If we were to group this image evenly we would get the following selection.

But if we were to group by distribution we would get the following. So basically what VQ claims to be is a way to compress images by picking the most common colors in the most effective grouping. It's generally a pretty generic palette format, and the small size of the textures means that the gains from compression are pretty negligible.

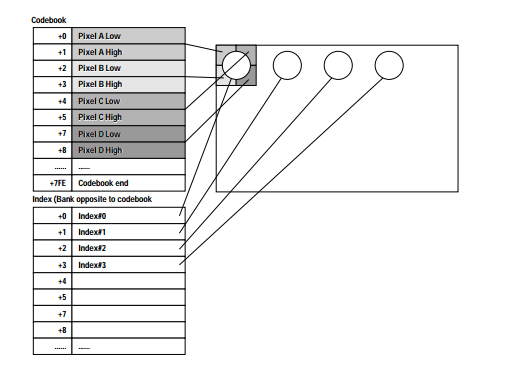

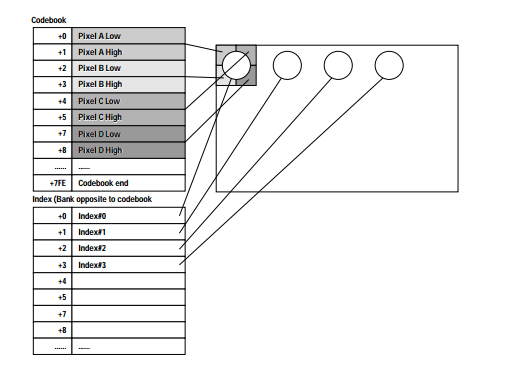

VQ is a compressed file format that has a codebook (normally with 256 entries) in which each entry contains 4 pixels. The body of the image is made of single-byte indexes that reference the codebook. Effectively it's the same as a normal palette-style image, with the exception that in most cases a palette entry is a single color for a single pixel, while VQ groups 4 pixels together.

VQ textures are generally structured as follows. First is the codebook at the top. Each entry in the codebook is 8 bytes, consisting of four 2-byte color values that make up four pixels. Generally the codebook defaults to 256 entries. But there are also "small VQ" textures, which have a different codebook size based on the width of the image and whether there are mipmaps or not. Following that is the data for the mipmaps, if they are declared. And then finally the encoded data, which is a sequence of bytes.

Each byte is an index into the codebook, and since each entry is 4 pixels positioned in a square, the body has one quarter as many bytes as the image has pixels (width / 2 by height / 2), because each byte covers two columns and two rows at once. The bytes inside the VQ image body are also stored in twiddled order, which means that you need to de-twiddle them to get the bytes in order.

The approach for reading VQ textures is to first determine the size of the codebook based on whether small VQ has been declared or not. Then seek past the mipmaps if they exist. De-twiddle the image body, use each byte for an area of 4 pixels, and then return the in-order 2D array of color values.
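Put together, a VQ decode without mipmaps might look like the sketch below. Two things here are assumptions rather than facts from the description above: the four colors inside a codebook entry are taken to be in the same order the twiddle recursion uses (top-left, bottom-left, top-right, bottom-right), and colors are read as little-endian. The name `decode_vq` and its `codebook_entries` parameter are made up for illustration.

```python
def detwiddle(x, y):
    # y on even bits, x on odd bits of the twiddled index.
    pos = 0
    for i in range(10):
        pos |= ((y >> i) & 1) << (2 * i)
        pos |= ((x >> i) & 1) << (2 * i + 1)
    return pos

def decode_vq(data, width, codebook_entries=256):
    """Decode a square VQ texture (codebook followed by the index body)."""
    # Each codebook entry is four 2-byte colors (8 bytes).
    codebook = []
    for e in range(codebook_entries):
        entry = [
            int.from_bytes(data[e * 8 + c * 2:e * 8 + c * 2 + 2], "little")
            for c in range(4)
        ]
        codebook.append(entry)
    body = data[codebook_entries * 8:]
    half = width // 2
    pixels = [0] * (width * width)
    # Assumed pixel order inside an entry, as (dx, dy) offsets.
    layout = ((0, 0), (0, 1), (1, 0), (1, 1))
    for by in range(half):
        for bx in range(half):
            # The body is twiddled over the grid of 2x2 blocks.
            entry = codebook[body[detwiddle(bx, by)]]
            for i, (dx, dy) in enumerate(layout):
                pixels[(by * 2 + dy) * width + bx * 2 + dx] = entry[i]
    return pixels
```

For a texture with mipmaps you would slice `body` further so that it starts at the full-size level.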

# Palette

Last is palette textures, which use either 16-color or 256-color palettes, with or without mipmaps. Palette textures are annoying to work with because you also need to manage the PVP palette file. The other annoying part is that they are used only extremely rarely, but they are used. In PSO I think the only place they show up is for some UI elements.

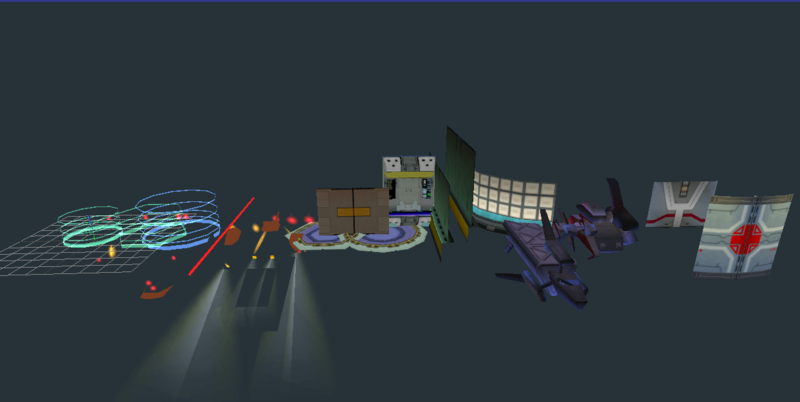

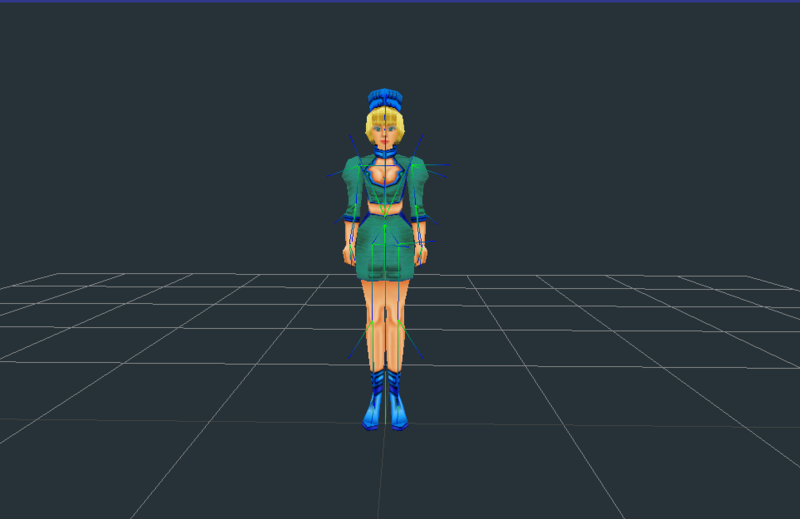

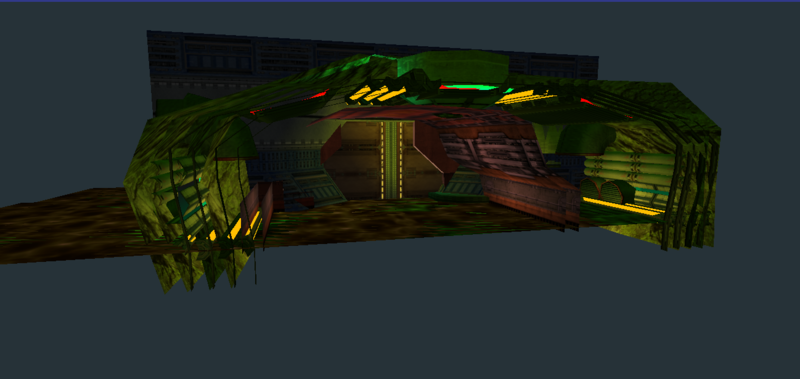

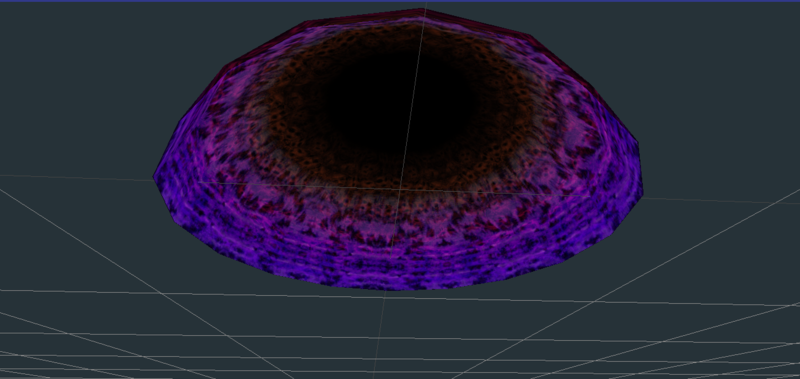

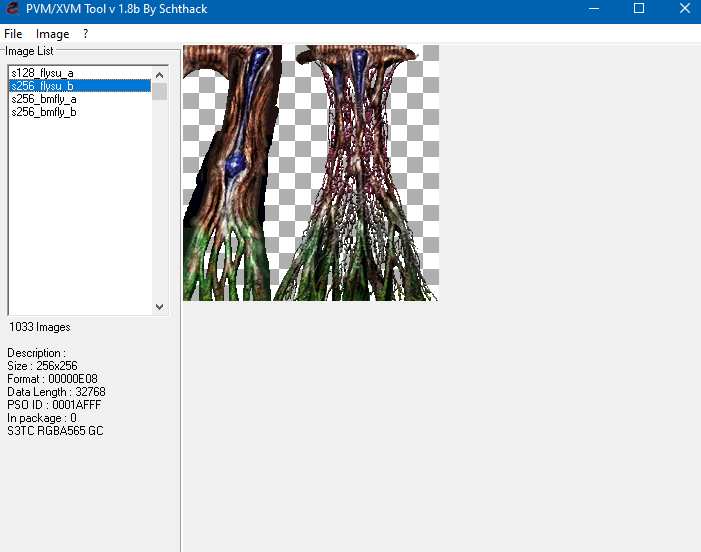

And I pretty much exported this once and then saved the images:

https://imgur.com/a/qurzV

The color palette is included in PVP files. And I think PVP files contain a small header with the color type, and the number of colors. And then there are either 16 or 256 2-byte color values included in the PVP file.

The PVR file that contains the image data will be marked as a palette file. There are two types. For 256 colors, each pixel will be 1 byte. For 16 colors there will be two pixels per byte, split into the upper four bits and the lower four bits. Each of these is treated as an index into the color palette. And I think the order of the data is also twiddled. For 256 colors this is pretty straightforward, as each byte is one reference. For 4 bits I can't remember if the order is twiddled or not (it probably is), because each byte holds two pixels in a row, so the order would apply to every other column.
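Unpacking the indexes and looking them up could be sketched as below. It leaves the twiddle question alone and just returns the indexes in file order. Which nibble comes first is an assumption (low nibble first here), and `unpack_indexes` and `apply_palette` are hypothetical names.

```python
def unpack_indexes(body, bits_per_pixel):
    """Turn the raw image body into a list of palette indexes, in file order."""
    if bits_per_pixel == 8:
        # 256 colors: one index per byte.
        return list(body)
    if bits_per_pixel == 4:
        # 16 colors: two indexes per byte.
        indexes = []
        for byte in body:
            indexes.append(byte & 0x0F)  # assumed: low nibble is the first pixel
            indexes.append(byte >> 4)
        return indexes
    raise ValueError("bits_per_pixel must be 4 or 8")

def apply_palette(indexes, palette):
    """Replace each index with its color from the palette (e.g. read from a PVP file)."""
    return [palette[i] for i in indexes]
```

If the nibble order turns out to be the other way around for a given file, swapping the two `append` lines is the only change needed.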

# Summary

I think that does a decent job of generally describing the PVR files and all of the possible combinations I've seen.